What is Programming?

By Verm

Tl;dr — Computers are extremely fast and completely stupid. To make use of a computer’s speed, a human has to provide all of the intelligence. Providing this intelligence is the exact art and subtle science of programming.

I have a restaurateur friend (let’s call him K for now) who has done well for himself in life. He lives in a nice house and can afford a full-time butler/gopher of sorts, who takes care of his laundry, odd jobs, and such. One evening, a few of us were relaxing after one hell of a party, and we felt like having some Maggi. Suresh the Butler was summoned and instructed to make a lot of Maggi. Now I should tell you that Suresh wasn’t the sharpest fellow you might ever meet. He might actually be closer to the bluntest fellow you might meet. What followed was the funniest conversation I have ever heard, with Suresh getting into grisly details like “What is yellow packet?”, “What exactly is ‘all of us’?”, “How to open the cupboard?”, “How exactly is the packet to be opened?” and so on, while a mix of rage and despair crept over K’s face. At the end of all that, though, we got some pretty good Maggi.

That conversation has stuck with me as a pretty accurate description of what programming is. We have Suresh The Computer that can do pretty much anything we want but must be told in a maddeningly precise manner about how to do it. And we have P The Programmer who can create the next Facebook or Google if only he can explain to Suresh what “Yellow” really, really means. Programming is the process of telling a computer exactly what activities it must do so that we can achieve our desired goal.

To understand programming, we first need to understand a little bit about computers.

The prevalent image of a computer is a box attached to a screen, a keyboard, a mouse, a printer, and so on. In fact, all of these things are accessories that let humans use a computer (cloud servers, for example, have no monitor, keyboard, or mouse). The actual computer, the real heart of it all, is the microprocessor chip (from Intel, AMD, or another maker) sitting on the motherboard. That is where all the “computing” happens.

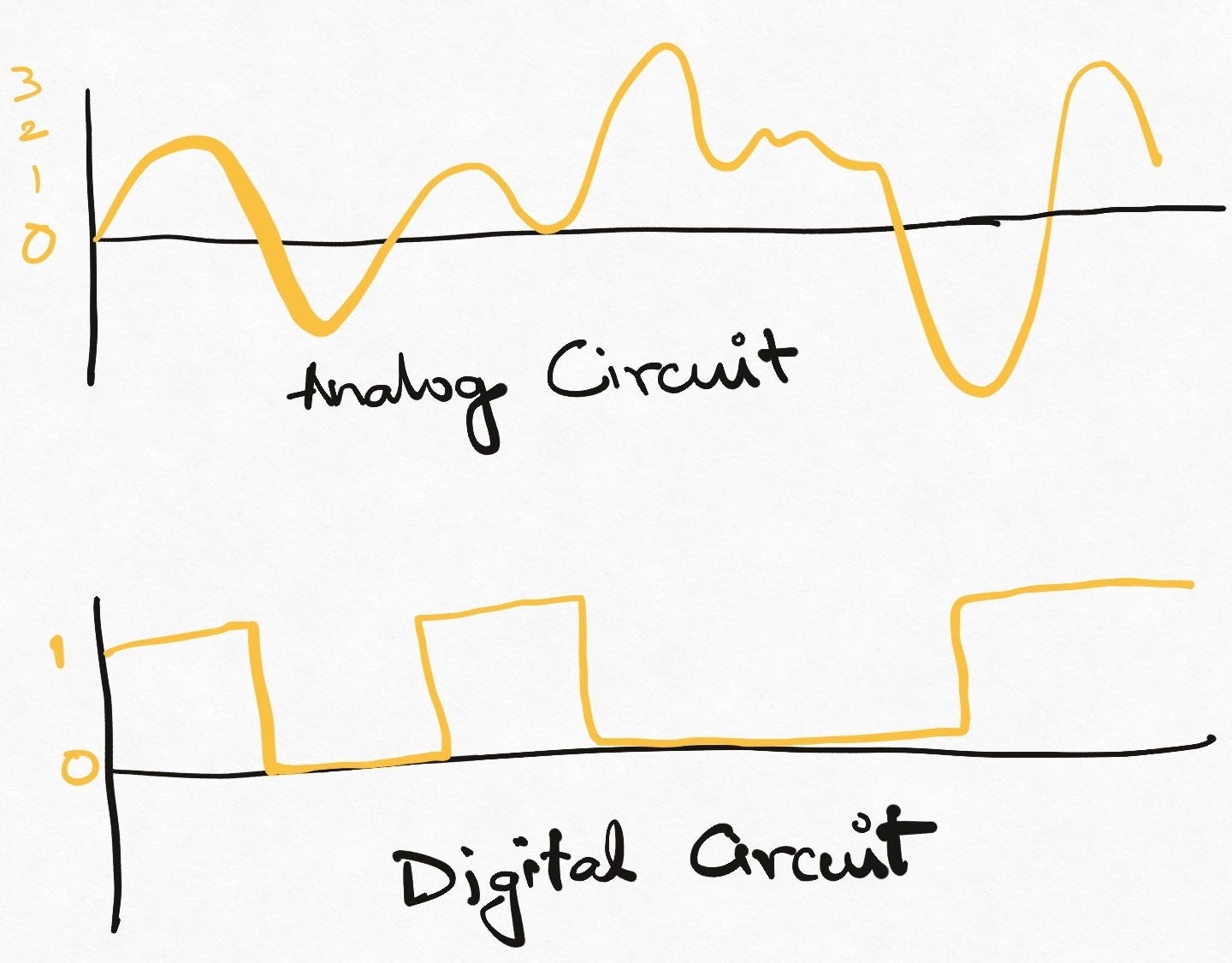

The other interesting bit (pun intended) about computers is that they are digital. This means that every signal inside them is either on or off. There are no half states. This is different from analog controls like the volume knob on a speaker or the throttle on a motorcycle, which can be turned gradually through many levels. Computers are not like that: at the lowest level, every value they work with is either on or off. In other words, it is binary: one or zero.

The simplest digital component is literally a switch: on or off. Digital electronic devices, including computers (i.e. the microprocessor), are built from special types of switches called logic gates. A logic gate is a little circuit that has n inputs, one output, and a fixed rule that decides what the output should be depending on its inputs. Already beginning to sound like programming, isn’t it?

There are some fundamental types of gates like AND (output is ‘ON’ if all inputs are ‘ON’), OR (output is ‘ON’ if any input is ‘ON’), NOR (output is ‘ON’ if all inputs are ‘OFF’), and so on. Integrated circuits (or ICs) combine millions of gates into intricate patterns, designed using the rules of Boolean algebra (De Morgan’s laws are a famous example), to create a vast variety of input and output combinations. A microprocessor is itself an IC, and a very elaborate one: modern chips pack billions of transistors. These are incredibly complicated arrangements of logic gates, and it takes a fat book to describe what will happen if you change one input in this way or that. Understanding it takes a superhuman electrician, also known as an electronics engineer or hardware engineer.
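To make the gate descriptions concrete, here is a small Java sketch that models AND, OR, and NOR as methods on true/false values and prints their truth table for two inputs. It only imitates the input/output rules, not the circuitry, and the class and method names are our own. It also checks one of De Morgan’s laws: NOR (NOT of OR) always equals AND applied to the inverted inputs.

```java
// A hypothetical sketch: logic gates modeled as Java boolean methods.
// Real gates are transistor circuits; this only mimics their input/output rules.
public class LogicGates {

    // AND: output is ON only if every input is ON
    static boolean and(boolean... inputs) {
        for (boolean in : inputs) {
            if (!in) return false;
        }
        return true;
    }

    // OR: output is ON if any input is ON
    static boolean or(boolean... inputs) {
        for (boolean in : inputs) {
            if (in) return true;
        }
        return false;
    }

    // NOR: output is ON only if every input is OFF (i.e. NOT OR)
    static boolean nor(boolean... inputs) {
        return !or(inputs);
    }

    public static void main(String[] args) {
        boolean[] values = {false, true};
        System.out.println("A B | AND OR NOR");
        for (boolean a : values) {
            for (boolean b : values) {
                System.out.printf("%d %d |  %d   %d   %d%n",
                        a ? 1 : 0, b ? 1 : 0,
                        and(a, b) ? 1 : 0, or(a, b) ? 1 : 0, nor(a, b) ? 1 : 0);
                // De Morgan's law: NOT (A OR B) equals (NOT A) AND (NOT B)
                if (nor(a, b) != and(!a, !b)) {
                    throw new AssertionError("De Morgan's law violated");
                }
            }
        }
    }
}
```

Running it prints one row per input combination; the NOR column is 1 only in the “0 0” row, and the De Morgan check never fires.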

Despite all this complexity, the hardware has no intent or purpose of its own. It is a tool, and the software engineer is now called upon to make this equipment do something useful. The job of the programmer is to analyze a problem and break it down into a specific set of steps, each of which involves flipping one or more switches (gates) so that we get the desired output.

For example, to open a website in your browser:

Step 1: Turn input 100346 to zero.

Step 2: Turn inputs 3454562348 and 846453 to one simultaneously, and immediately change input 4733 to off.

Step 3: ……

This is obviously a made-up example, but the reality is not all that different. Programming is the process of writing a set of instructions that manipulate the microprocessor’s inputs in a certain way to achieve a specific final output.

Early programmers worked at this level of complexity and made tremendous advancements in the field of computing. Room-sized computers and punched cards are relics of this era. But as you might imagine, it takes a special kind of genius to do anything in this way. Much like multiplying large Roman numerals, the task was complicated, error-prone, and difficult to explain to other humans. This severely limited what we could practically do with computers. Programmers needed a better way to talk to computers, and this led to the development of programming languages.

Assembly language is among the lowest-level programming languages in use today. While it is still very close to how the hardware is built, it allows programmers to say things like mov eax, 3 (put the value 3 in eax, a register, i.e. a small named storage slot inside the processor) or add eax, ebx (add the value in register ebx to the value in eax and store the result back in eax) instead of talking about every single input and output in the hardware. In other words, it has a higher level of abstraction. It has the concept of data and supports a set of operations that can be performed on this data, a huge improvement over on/off-based programming.
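To get a feel for what “data plus operations” means, the following Java sketch is a hypothetical toy processor that understands just the two instructions above. The ToyRegisters class, its execute method, and the rule that unset registers read as 0 are our own assumptions for illustration; a real assembler translates such lines into machine code rather than interpreting text like this.

```java
import java.util.HashMap;
import java.util.Map;

// A hypothetical toy "processor" that understands only two assembly-style
// instructions (mov and add, using x86 Intel-syntax operand order).
// It illustrates named storage plus operations on it; it is not how a
// real CPU or assembler works.
public class ToyRegisters {

    // Register name -> current value
    private final Map<String, Integer> registers = new HashMap<>();

    // Executes one instruction such as "mov eax, 3" or "add eax, ebx"
    void execute(String instruction) {
        String[] parts = instruction.trim().split("[\\s,]+");
        String op = parts[0];
        String dest = parts[1];
        String src = parts[2];
        // The source may be a literal number or another register
        int value = src.matches("-?\\d+") ? Integer.parseInt(src) : get(src);
        switch (op) {
            case "mov":
                registers.put(dest, value);             // dest = value
                break;
            case "add":
                registers.put(dest, get(dest) + value); // dest = dest + value
                break;
            default:
                throw new IllegalArgumentException("Unknown instruction: " + op);
        }
    }

    // Unset registers read as 0 in this toy model (an assumption)
    int get(String register) {
        return registers.getOrDefault(register, 0);
    }

    public static void main(String[] args) {
        ToyRegisters cpu = new ToyRegisters();
        cpu.execute("mov eax, 3");
        cpu.execute("mov ebx, 4");
        cpu.execute("add eax, ebx");
        System.out.println("eax = " + cpu.get("eax")); // prints "eax = 7"
    }
}
```

The program talks about named values and arithmetic, never about individual switches; that gap is exactly the abstraction assembly provides.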

The story of programming languages is the story of increasing levels of abstraction in programming. Abstraction is the process of hiding the complexity of something behind something simple. Modern programming languages offer very high-level statements that, impossible as it sounds, still map to the on/off of the computer hardware. Something as trivial-looking as System.out.println("Hello World!") (a Java statement that prints ‘Hello World!’ on the screen) goes through hundreds of transformations to generate possibly thousands of little on/off combinations that cause the pixels on the screen to glow in that specific way.
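For reference, this is a minimal complete Java program built around that one statement. The class name HelloWorld is our own choice; Java expects a public class to live in a file of the same name (here, HelloWorld.java).

```java
// A minimal complete Java program wrapping the one-line print statement.
public class HelloWorld {
    // The Java runtime starts executing the program here
    public static void main(String[] args) {
        System.out.println("Hello World!");
    }
}
```

Compiling it with javac HelloWorld.java and running it with java HelloWorld prints Hello World!. Everything between that line of text and the glowing pixels is handled by the compiler, the Java runtime, the operating system, and the hardware.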

Programming languages, just like real-world languages, have different flavors and do different things well. Some are formal (Java), some are flexible (Perl), some are easy to learn (Python), and so on. Programmers choose languages depending on their preferred style and on the problem they need to solve.

A program, once written in any programming language, is typically passed through a compiler (sometimes an interpreter, but never mind that for now) to generate the exact on/off instructions that the computer can execute. Compilers are the foundation of all high-level programming languages, and the first compiler for a new language is often written by the language’s own creators. Without compilers, we would have to write the same program separately for each type of machine. Compilers take care of this problem in one go by being machine-specific themselves so that our programs don’t have to be. They allow programmers to rise above the minutiae of hardware to a more human-friendly level of expression.

Now let’s look at the actual purpose of programming. Programs are written to solve problems that would take humans too long to solve. How does this work? The first phase is to figure out a step-by-step way to solve the problem, regardless of the programming aspect. The “step-by-step” part is really REALLY important: any hand-waving here will just not work, because we eventually need to translate these steps into the language of switches, on and off. The solution, described in such a manner, is called an algorithm. Algorithms are not just the realm of artificial intelligence, social media feeds, and so on. “Algorithm” is just a fancy way of saying “a precise, step-by-step solution to the problem”.

Let’s imagine that we have a bunch of numbers and we want to find the smallest number among them. How can we do this? One way is to pick one of the numbers at random, compare it with all the other numbers, and see if it is the smallest. If not, we repeat this process until we find the smallest number.
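Here is one way to write this first idea in Java, as a sketch. The method name findSmallestByRandomGuessing and the sample numbers are hypothetical, and the random number generator is passed in so the behavior can be repeated when testing.

```java
import java.util.Random;

// A sketch of the first (slow) idea: pick a number at random, check it
// against every other number, and repeat until the pick is the smallest.
public class RandomSmallest {

    static int findSmallestByRandomGuessing(int[] numbers, Random random) {
        if (numbers.length == 0) {
            throw new IllegalArgumentException("need at least one number");
        }
        while (true) {
            // Step 1: pick one of the numbers at random
            int candidate = numbers[random.nextInt(numbers.length)];
            // Step 2: compare it with all the other numbers
            boolean isSmallest = true;
            for (int other : numbers) {
                if (other < candidate) {
                    isSmallest = false;
                    break;
                }
            }
            // Step 3: if nothing beat it, we are done; otherwise try again
            if (isSmallest) {
                return candidate;
            }
        }
    }

    public static void main(String[] args) {
        int[] numbers = {42, 7, 19, 3, 88, 15};
        System.out.println(findSmallestByRandomGuessing(numbers, new Random()));
    }
}
```

Each guess costs a full pass over the numbers, and nothing stops the same losing number from being picked again. With n distinct numbers, each guess has a 1 in n chance of being right, so on average it takes about n guesses, or roughly n × n comparisons.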

Note how we did not say “find the smallest number and show it as the output” because it is not clear “how” that can be done. There’s a reason programmers are pedantic about things — that’s the only kind of communication a computer understands.

The algorithm described above will work, but intuitively it seems very inefficient. If we have lots of numbers, randomly searching for the smallest one might take very long. In programming terms, we say this algorithm has high time complexity (it takes too damn long to run).

Here’s a better solution. We go over all the input numbers one by one and keep the smallest number we have seen so far in our hands. If we see a smaller one, we pick it up and drop the one in our hands. At the end of just one pass over all the numbers, we will have the smallest number.

This algorithm is much faster (lower time complexity) but needs space in our hands so that we can hold on to the smallest number we have seen so far. In the computer world, this space is represented by memory (RAM), and programmers refer to the amount of memory required by an algorithm as its space complexity. Space and time complexity can often be traded off depending on which computing resources we have in abundance and which are scarce.

Here is a rough Java program showing one pass over all the input numbers to find the smallest number, as described by the second algorithm. Java is a high-level language offering a lot of abstraction from the underlying hardware, so the program reads reasonably close to normal English.
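A minimal sketch of such a program follows. The class name SmallestNumber, the method name findSmallest, and the sample numbers are our own, chosen for illustration.

```java
// A sketch of the one-pass algorithm: walk over the numbers once,
// keeping the smallest value seen so far "in our hands".
public class SmallestNumber {

    static int findSmallest(int[] numbers) {
        if (numbers.length == 0) {
            throw new IllegalArgumentException("need at least one number");
        }
        int smallestSoFar = numbers[0]; // start by holding the first number
        for (int i = 1; i < numbers.length; i++) {
            if (numbers[i] < smallestSoFar) {
                smallestSoFar = numbers[i]; // drop the old one, pick up the smaller one
            }
        }
        return smallestSoFar; // after one pass, this is the smallest
    }

    public static void main(String[] args) {
        int[] numbers = {42, 7, 19, 3, 88, 15};
        System.out.println("Smallest number: " + findSmallest(numbers)); // prints "Smallest number: 3"
    }
}
```

The single variable smallestSoFar is the “space in our hands”: one extra number no matter how many inputs there are, and each input is looked at exactly once.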

Of course, if you miss any punctuation marks or brackets, the program will not work at all. Computers are EXTREMELY fussy about syntax (the precise way of writing a program in a given language), and each language has its own syntax.
